In the blink of an eye, we have traversed centuries of relentless innovation and technological marvels. From the abacus to the smartphone, our journey through time has been marked by an unceasing hunger for progress. At the forefront of this evolution stands Artificial Intelligence (AI), a concept that has not only redefined the boundaries of human imagination but also transformed the landscape of our daily lives. Join us as we embark on a captivating exploration of this remarkable odyssey, tracing AI’s evolution from simple calculation to sophisticated prediction.

For centuries, people have struggled to understand the meaning hidden in large amounts of data. After all, estimating how many trees grow in a million square miles of forest is one thing. Classifying what species they are, how they cluster at different altitudes, and what could be built with their wood is quite another. That kind of information can be difficult to extract from a very large amount of data. Scientists call it “dark data”: information without structure, just a huge, unsorted mess of facts. To sort out unstructured data, humans have created many different calculating machines.

Over 2000 years ago, tax collectors for Emperor Qin Shihuang used the abacus — a device with beads on wires — to break down tax receipts and arrange them into categories. From this, they could determine how much the Emperor should spend on building extensions to the Great Wall of China.

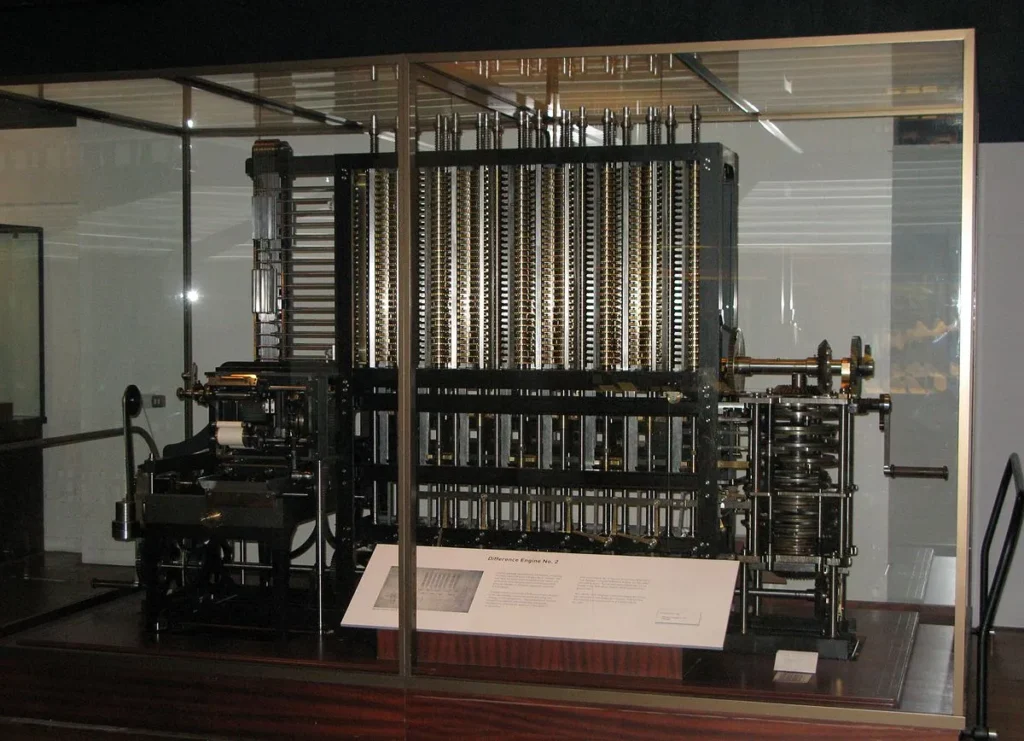

In England during the mid-1800s, Charles Babbage designed (but never finished) what he called a “difference engine”, a machine for computing tables of logarithms and trigonometric functions; his collaborator Ada Lovelace later wrote about its more ambitious successor, the Analytical Engine. Had it been built, the difference engine might have helped the British Navy produce accurate tables of ocean tides and depth soundings to guide British sailors through rough waters.

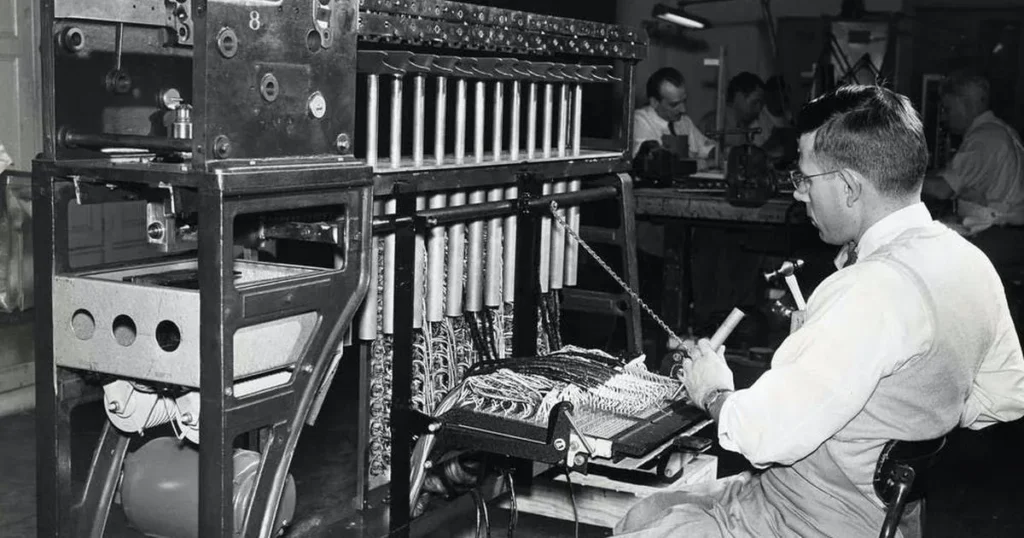

By the early 1900s, companies such as IBM’s forerunners were using machines to tabulate and analyze census data for entire national populations. They did not just count people. They found patterns and structure within the data: useful meaning beyond mere numbers. These machines revealed how different groups within the population moved and settled, earned a living or experienced health problems, information that helped governments better understand and serve those groups.

The word to remember across those twenty centuries is tabulate. Think of tabulation as “slicing and dicing” data to give it a structure, so that people can uncover patterns of useful information. You tabulate when you want to get a feel for what all those columns and rows of data in a table really mean. Researchers call these centuries the Era of Tabulation, a time when machines helped humans sort data into structures to reveal its secrets.
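To make “slicing and dicing” a little more concrete, here is a minimal Python sketch of tabulation: it counts how many records share each combination of chosen fields, much as a census tabulator sorted cards into bins. The records, field names and the `tabulate` helper are all hypothetical, invented purely for illustration.

```python
from collections import Counter

# Hypothetical census-style records (invented for illustration only).
records = [
    {"region": "North", "occupation": "farmer"},
    {"region": "North", "occupation": "miner"},
    {"region": "South", "occupation": "farmer"},
    {"region": "South", "occupation": "farmer"},
    {"region": "North", "occupation": "farmer"},
]

def tabulate(rows, *keys):
    """Hypothetical helper: count rows sharing each combination of the given fields."""
    return Counter(tuple(row[k] for k in keys) for row in rows)

# One "slice": totals per region.
by_region = tabulate(records, "region")

# A finer "dice": totals per region and occupation.
by_region_and_job = tabulate(records, "region", "occupation")

for (region, job), count in sorted(by_region_and_job.items()):
    print(f"{region:<6} {job:<8} {count}")
```

Choosing different fields for `keys` gives a different slice of the same data, which is the essence of tabulation: the raw records never change, only the structure imposed on them.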

Data analysis changed in the 1940s

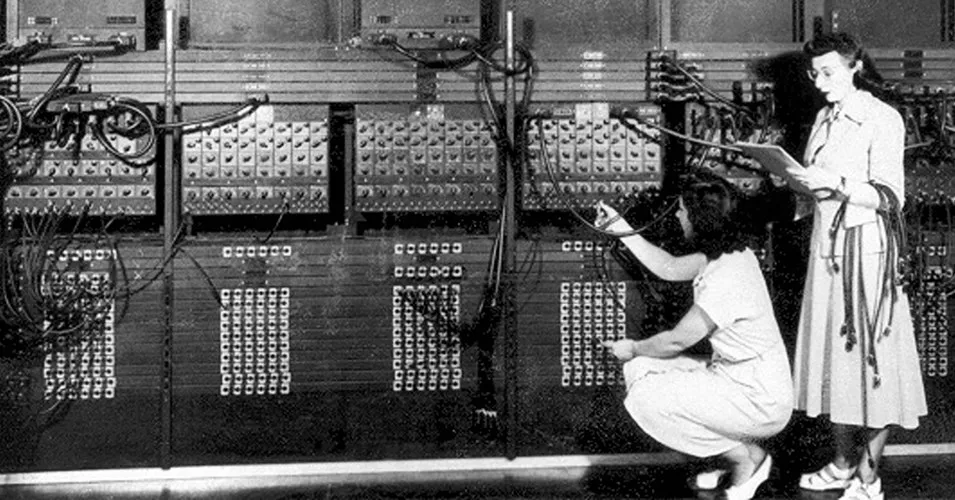

During the turmoil of World War II, a new approach to dark data emerged: the Era of Programming. Scientists began building electronic computers, such as the Electronic Numerical Integrator and Computer (ENIAC) at the University of Pennsylvania, that could be set up to run different sets of instructions (today we call those “programs”) and so perform many kinds of calculation. ENIAC, for example, not only calculated artillery firing tables for the US Army but also ran secret calculations to study the feasibility of thermonuclear weapons.

This was a huge breakthrough. Programmable computers guided astronauts from Earth to the moon and were reprogrammed during Apollo 13’s troubled mission to bring its astronauts safely back to Earth.

Programmable computing even drives the phone you hold in your hand. But the dark data problem has grown too. Modern businesses and technology generate so much data that even the finest programmable supercomputer could not analyze all of it before the “heat death” of the universe. Electronic computing is facing a crisis.

A brief history of AI

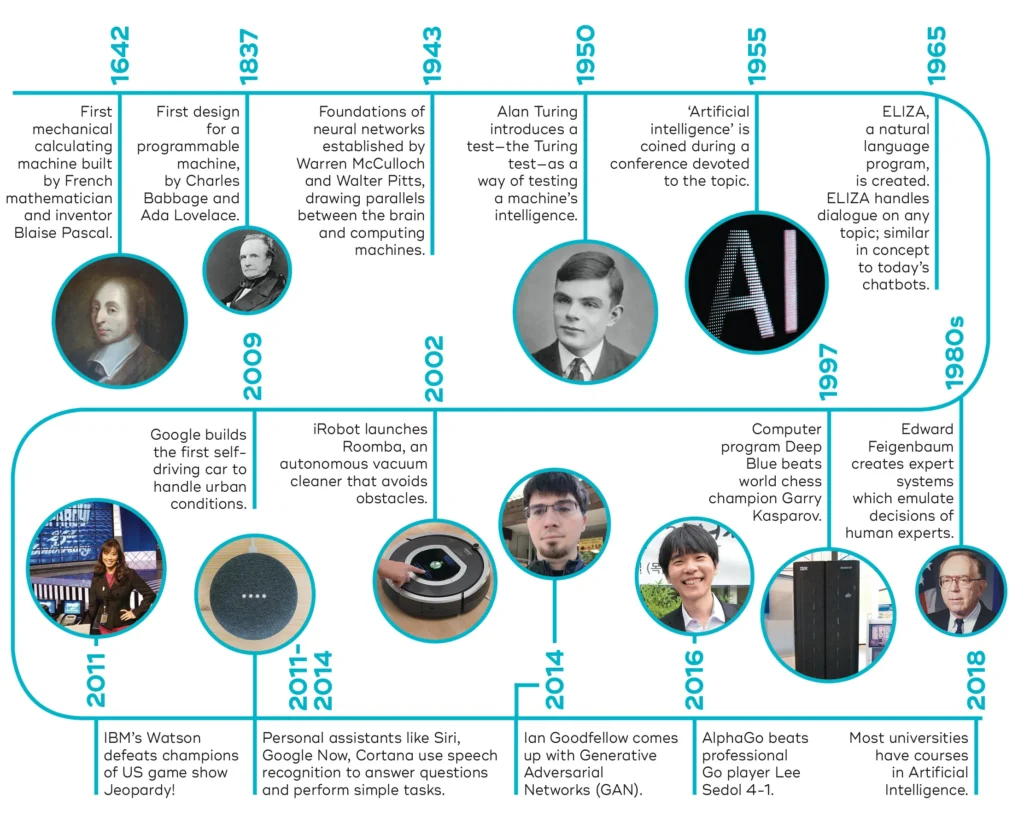

The history of artificial intelligence dates back to philosophers pondering the question, “What more can be done with the world we live in?” This question led to discussions and to the first of many ideas about what technology might make possible. Since the advent of electronic computing, there have been several important events and milestones in the evolution of artificial intelligence. Here’s an overview to get started.

The Era of AI began one summer in 1956

Early in the summer of 1956, a small group of researchers led by John McCarthy and Marvin Minsky gathered at Dartmouth College in New Hampshire. There, at one of the oldest colleges in the United States, they launched a revolution in scientific research and coined the term “artificial intelligence”.

The researchers proposed that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” They set out to realize this vision within 20 years and raised millions of dollars to pursue it. Over the next two decades, they accomplished tremendous things, creating machines that could prove geometry theorems, speak simple English and even solve algebra word problems. For a time, AI was one of the most exciting fields in computer science.

But then came winter

By the early 1970s, it became clear that the problem was larger than researchers imagined. There were fundamental limits that no amount of money and effort could solve.

- Limited calculating power: Today, everyone expects a computer to have plenty of processing power and memory. Every ad you see for companies like Apple or Dell emphasizes how fast their processors run and how much data they can work with. But in that era, even the most successful natural-language programs could manipulate a vocabulary of only about 20 words, because that was all that fit in memory. In 1976, scientists estimated that merely matching the performance of the human retina would require about a billion instructions per second, at a time when the world’s fastest computer could run only about a hundred million.

- Limited information storage: Even simple, commonsense reasoning requires a lot of information to back it up. But no one in 1970 knew how to build a database large enough to hold even the information known by a 2-year-old child.

As these issues became clear, the money dried up, ushering in the First Winter of AI.

The weather was rough for half a century

It took about a decade for technology and AI theory to catch up, primarily through a new form of AI called “expert systems”. These were limited to specific domains of knowledge that could be manipulated with sets of rules. They worked well enough, for a while, and became popular in the 1980s. Money poured in. Researchers invested in enormous mainframe machines that cost millions of dollars and occupied entire floors of large university and corporate buildings. It seemed as if AI were booming once again. But soon the needs of scientists, businesses and governments outgrew even these new systems, and funding for AI collapsed again.

Then came another AI chill

In the late 1980s, the AI boom cooled, in part because of the rise of personal computers. Machines from Apple and IBM sitting on desks in homes and offices grew more powerful than the huge corporate systems purchased just a few years earlier. Businesses and governments stopped investing in large-scale AI computing, and funding dried up. Over 300 AI companies shut down or went bankrupt during the Second Winter of AI.

Now, the forecast is sunny

In the mid-1990s, almost half a century after the Dartmouth research project, the Second Winter of AI began to thaw. Behind the scenes, computer processing finally reached speeds fast enough for machines to solve complex problems.

At the same time, the public began to see AI master sophisticated games and other demanding tasks.

- In 1997, IBM’s Deep Blue beat world chess champion Garry Kasparov, evaluating about 200 million chess positions per second.

- In 2005, a Stanford University robot drove itself down a 131-mile desert trail.

- In 2011, IBM’s Watson defeated two grand champions in the game of Jeopardy!

Today, AI has proven its value in fields ranging from cancer research and big data analysis to defense systems and energy production. Artificial intelligence has come of age and become one of the hottest fields in computer science. Its achievements affect people every day, and its abilities continue to grow rapidly. The Two Winters of AI have ended!